Parallel Tones

Upstate Art Weekend

@ Neuland

High Falls NY 2024

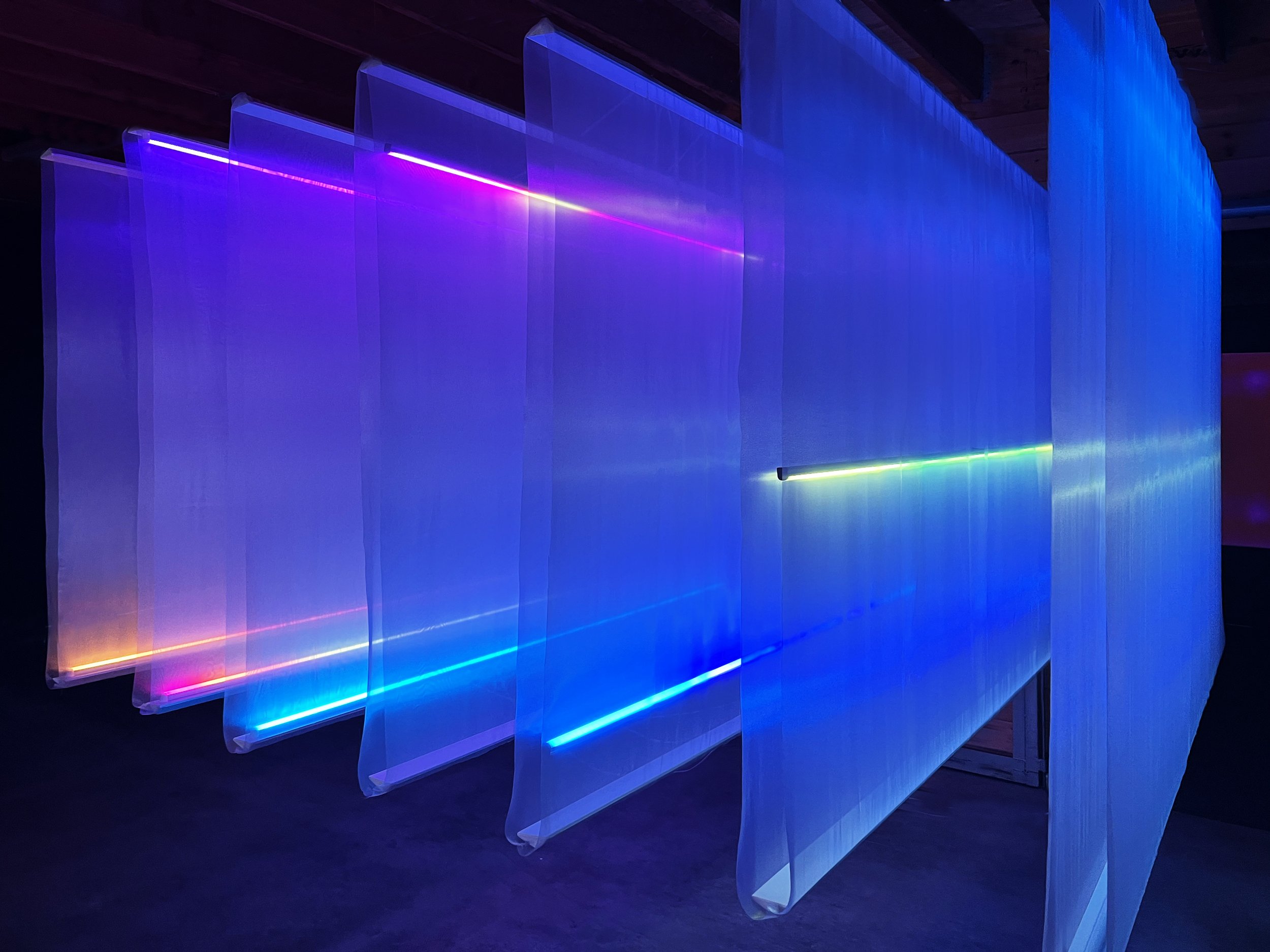

Parallel Tones is a generative light and sound sculpture. This installation consists of a series of interconnected works powered by the same generative, aleatory seeds and cascading data streams. Music triggers shifts in light and the works evolve together, one slow breath at a time.

Viewed from either end, this piece resembles a classic abstract painting, but it also lets the viewer pull the layers apart and experience real-world color mixing, revealing a range of colors beyond what can be seen on a canvas or computer screen.

As a musician and abstract painter for several decades, I have always thought in “synesthetic” terms, reaching for ways to create powerful interactions between sound & light. My process uses generative techniques with connected nodes, networked systems and specific compositional choices, allowing for controlled randomness within refined boundaries.

SETI Institute

Data Sonification

A first look at a collaboration with SETI Institute astronomer Ann Marie Cody, PhD, capturing light data from distant stars. I’m using her data to control an algorithmic musical composition: light levels control several musical elements (pitch, harmonies, filters and effects). As the light changes, piano arpeggios and other accompanying harmonic layers move up and down the keyboard, playing notes within a predetermined key. It’s exciting to think of distant stars sending us light from all directions and to be able to use it as a generative creative source.

Light fluctuations can be caused by various factors, such as rotational modulation of starspots, obscuration by dust particles and/or other stellar companions, and, most excitingly, strange anomalies that would be consistent with scientific theories of “futuristic” orbiting superstructures used by intelligent civilizations to harvest solar power. 😳 Whoa! We can learn a lot from light! This was first shown at SETI’s Drake Awards (2025).
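For readers curious how a light curve can drive notes that always stay in key, here is a minimal Python sketch. It assumes brightness readings arrive as a plain list of numbers, a major scale rooted at MIDI note 48, and a three-octave range; the scale, root, range, and the `flux_to_notes` name are all illustrative assumptions, not the actual composition.

```python
from typing import Sequence

# Assumption: a major scale. The text only says "a predetermined key".
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]


def flux_to_notes(flux: Sequence[float], root: int = 48, octaves: int = 3,
                  steps: Sequence[int] = MAJOR_STEPS) -> list[int]:
    """Map brightness readings to MIDI note numbers that stay inside one key."""
    lo, hi = min(flux), max(flux)
    span = (hi - lo) or 1.0  # a perfectly flat light curve maps to the root note
    # Every scale degree available across the chosen octave range, low to high.
    palette = [root + 12 * o + s for o in range(octaves) for s in steps]
    notes = []
    for f in flux:
        t = (f - lo) / span                   # normalize brightness to 0..1
        idx = round(t * (len(palette) - 1))   # brighter star -> higher scale degree
        notes.append(palette[idx])
    return notes
```

Because the output is chosen from a precomputed palette of in-key pitches, any fluctuation in the data can only move the music up or down the scale, never off it. Arpeggiation, filters, and effects would be layered on top of these pitches and are not shown.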

Amphora Projects

Waterforms @ 1Hotel, Surfcomber, Sagamore Hotels, South Beach, Miami, Art Basel / Miami Art Week 2024

Amphora Projects, the creative collaboration of artists Aaron Alden and Frankie Batista, examines the beauty and expression of water as both subject and medium. Their work focuses on immersive, aqua-kinetic video installations that capture water in ultra-high-frame-rate slow motion. The viewer’s presence displaces the waves; if no motion is detected, the waves take over again. This carries a subtle but ominous message:

If we do nothing, we will disappear.

This exhibit was created to shine a light on the environmental efforts of Blue Action, VolunteerCleanup.org, Resilience Creative, the City of Miami, and the City of Miami Beach, with tech contributions from Luxedo. The interactive exhibit is powered by TouchDesigner and uses a frame comparator on the feed from a simple webcam.
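The frame-comparator idea can be sketched in plain Python: compare each webcam frame with the previous one, measure what fraction of pixels changed, and let a smoothed “presence” value rise quickly with motion and ease back when the room is still (the waves taking over again). Frames are assumed to be small grayscale grids of 0 to 255 values, and the thresholds, rates, and function names are hypothetical; this is not the TouchDesigner network itself.

```python
def motion_amount(prev, curr, pixel_threshold=25):
    """Fraction of pixels whose brightness changed by more than pixel_threshold (0-255 scale)."""
    changed = total = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def update_presence(level, motion, motion_threshold=0.02, rise=0.5, fall=0.05):
    """Smoothed 0..1 viewer presence: jumps up quickly with motion, eases back when still."""
    target = 1.0 if motion > motion_threshold else 0.0
    rate = rise if target > level else fall  # fast attack, slow release
    return level + (target - level) * rate
```

Called once per frame, `presence` could scale how far the waves are pushed aside. The asymmetric rise and fall rates keep a passing viewer from causing a flicker while still letting the water reclaim the screen gradually.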

Parallel Tones

v2 Motion Reactive

Variations of this evolving work have been shown @ Satellite Art Show, Williamsburg, Brooklyn 2024; Upstate Art Weekend @ Neuland, High Falls, NY 2024; and Subjective Art Fest @ LUME Studios, Tribeca, NYC 2024.

This latest piece from my Parallel Tones series consists of six LED strips hung behind a single layer of translucent fabric, paired with six corresponding motion sensors. The composition changes with the viewer’s movement: each trigger causes the corresponding LED strip to change color and adjust the length of its illuminated pixels, creating an artificial sense of depth.

This iteration features modified code that randomly chooses light strips to fade out. This adds a surprisingly dynamic new element, as the shadows cast by the unlit strips create subtle additional gradations. As with much of the work I’ve been making recently, I consider this both a finished piece and a prototype for scalable, site-specific, architectural installations. Controlled by a self-contained microprocessor, the piece can run indefinitely once installed, and its code can be updated wirelessly.
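A minimal sketch of the strip behavior described above, assuming each sensor trigger picks a new random hue and a new illuminated length, and that some separate timing logic (not described in the text) occasionally chooses a random strip to fade. `PIXELS_PER_STRIP`, the fade rate, and all class and function names are hypothetical; the real piece runs its own code on its own hardware.

```python
import random
from dataclasses import dataclass

PIXELS_PER_STRIP = 60  # hypothetical strip length


@dataclass
class Strip:
    hue: float = 0.0              # position on the color wheel, 0..1
    lit: int = PIXELS_PER_STRIP   # how many pixels are illuminated
    level: float = 1.0            # overall brightness, 0..1
    fading: bool = False


def on_trigger(strip: Strip, rng: random.Random) -> None:
    """Sensor fired: new color and new illuminated length; a fading strip is revived."""
    strip.hue = rng.random()
    strip.lit = rng.randint(1, PIXELS_PER_STRIP)
    strip.level = 1.0
    strip.fading = False


def pick_fade(strips: list[Strip], rng: random.Random) -> int:
    """Randomly choose one strip to start fading out; return its index."""
    i = rng.randrange(len(strips))
    strips[i].fading = True
    return i


def step(strips: list[Strip], fade_rate: float = 0.02) -> None:
    """Advance one frame: strips marked as fading dim toward zero."""
    for s in strips:
        if s.fading:
            s.level = max(0.0, s.level - fade_rate)
```

Passing a seeded `random.Random` makes a session reproducible for testing, while an unseeded one gives the piece fresh variation every run.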

To make sure motion is detected only directly in front of each of the six motion sensors, I went through several rounds of improvements to the 3D-printed parts. With a simple tube, I was getting many false triggers. Luckily, Blender let me get virtually small and climb inside to see what was causing them: reflections from the side of the tube were making the image sensor think it was seeing motion in front of the lens when objects were actually off to the sides. I changed the design several times to minimize reflected light bouncing off the tube, resulting in more consistent head-on triggers.

Interested in building something similar in your space? Let’s talk!

Weather Brush

Data visualization

Hudson Valley NY

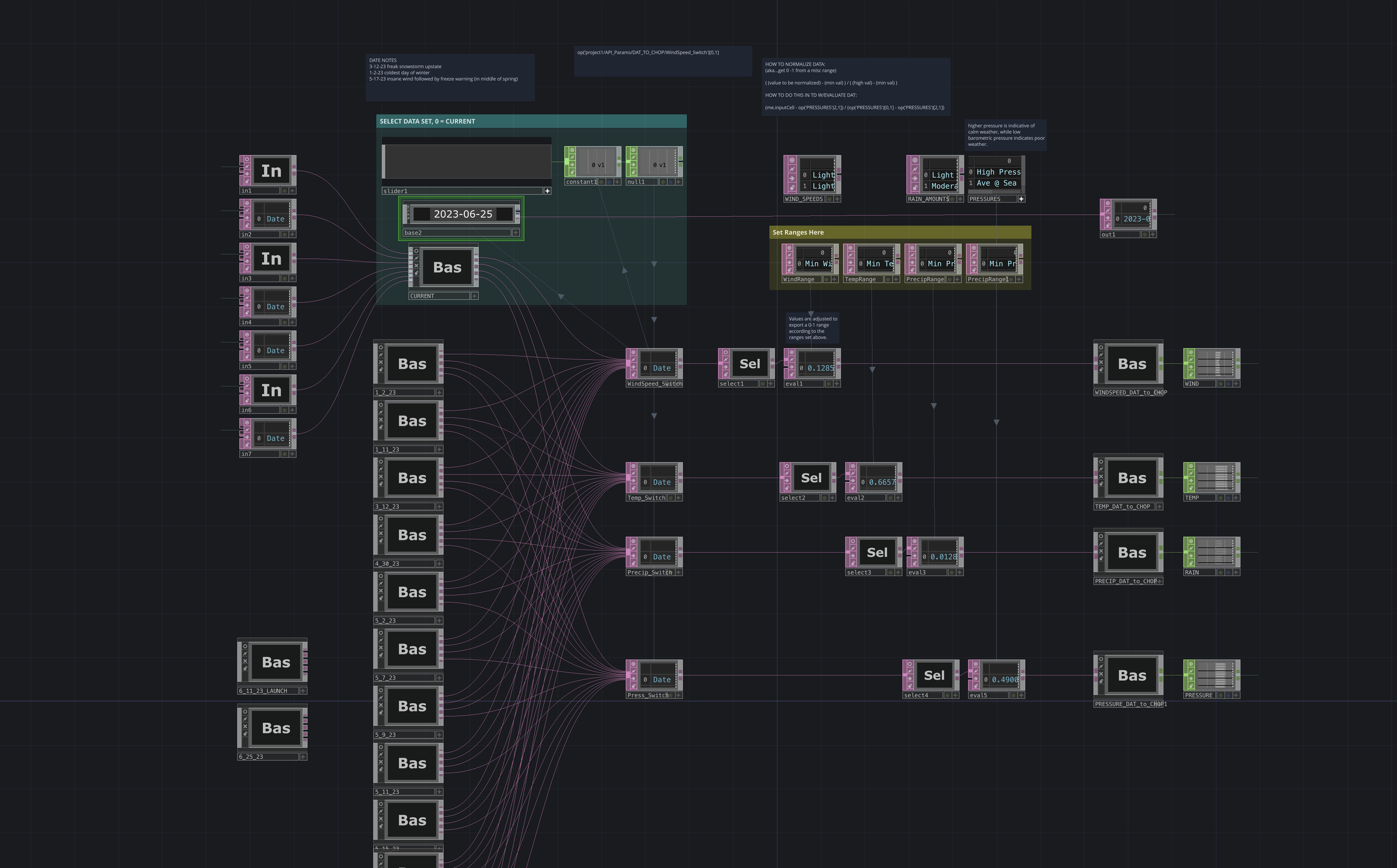

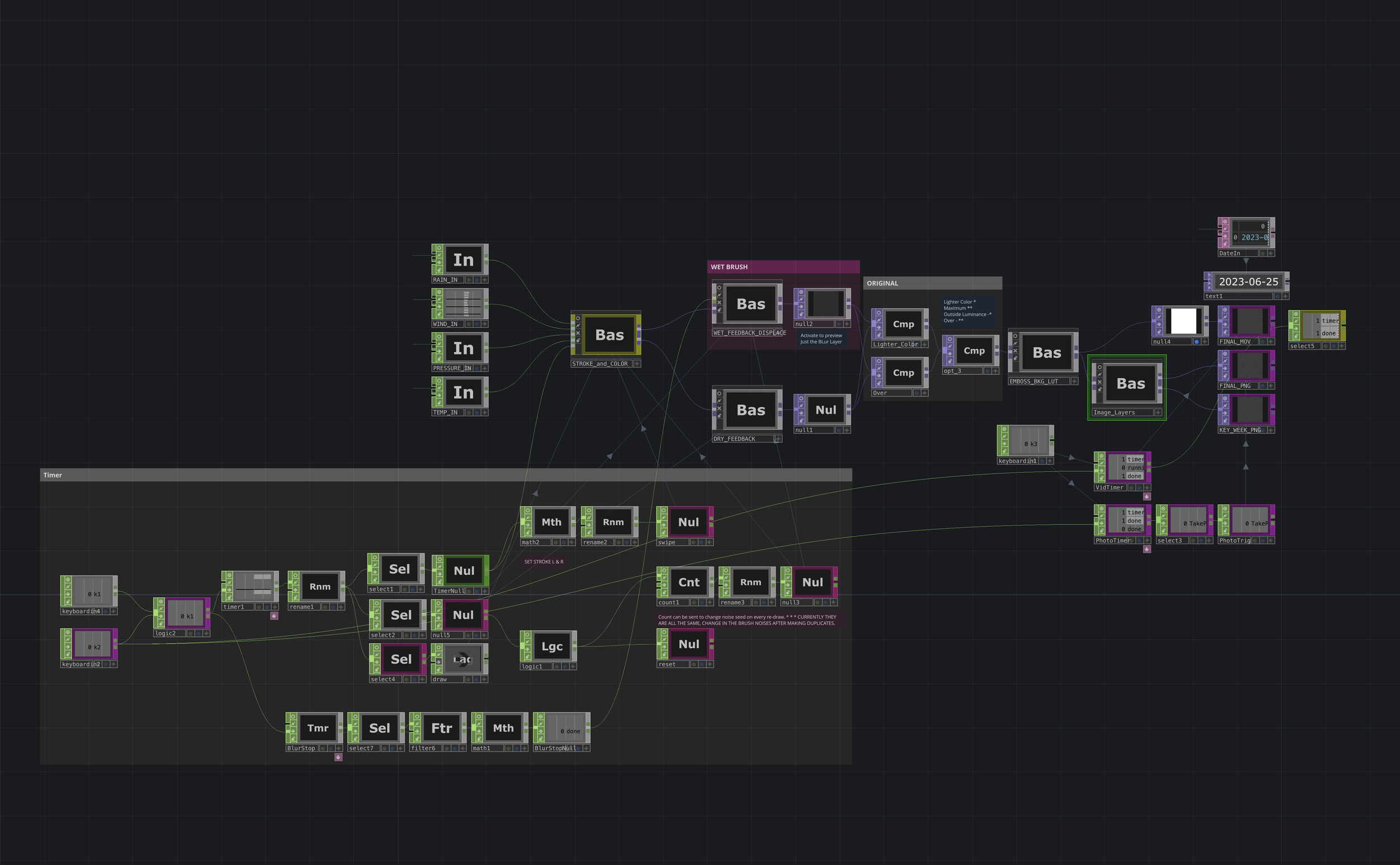

A weekly weather forecast visualized as digital generative art.

One full week of weather runs from left to right, and 10 weather stations along the Hudson Valley, from Albany to NYC, run from top to bottom. The motion resembles one large brush stroke but is made of 10 sections, each independently responding to hourly temperature, wind, precipitation, and barometric pressure.

Read more about this project on TouchDesigner’s showcase site.

Thanks to the Derivative team for featuring me!

For TouchDesigner-ers, here’s a look at how the document was built. There are four main components: weather API collection, data cleaning/parsing, DAT to CHOP conversion, and painting. It’s an insanely complicated file, and I’ll be making efficiency improvements to the data side as I push ahead.
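As a rough illustration of the cleaning and DAT-to-CHOP stages, the Python sketch below forward-fills missing hourly readings and rescales each variable to the 0 to 1 range, so every station’s section of the brush stroke gets comparable channels. The data layout (a dict per station), the forward-fill strategy, and the shared min/max per variable are assumptions; it does not call any weather API or TouchDesigner operator.

```python
VARIABLES = ("temperature", "wind", "precipitation", "pressure")


def fill_gaps(values):
    """Forward-fill missing (None) readings; leading gaps take the first valid value."""
    first = next((x for x in values if x is not None), 0.0)
    filled, last = [], first
    for x in values:
        if x is not None:
            last = x
        filled.append(last)
    return filled


def to_channels(readings):
    """readings[station][variable] -> hourly values; returns the same shape scaled to 0..1.

    Each variable uses one min/max shared by all stations, so the same wind speed
    moves the Albany section and the NYC section by the same amount.
    """
    filled = {
        station: {var: fill_gaps(series[var]) for var in VARIABLES}
        for station, series in readings.items()
    }
    channels = {station: {} for station in filled}
    for var in VARIABLES:
        values = [x for series in filled.values() for x in series[var]]
        lo, hi = min(values), max(values)
        span = (hi - lo) or 1.0  # a constant variable maps to 0 instead of dividing by zero
        for station, series in filled.items():
            channels[station][var] = [(x - lo) / span for x in series[var]]
    return channels
```

Normalizing per station instead would exaggerate small local changes; sharing the range keeps the valley-wide picture honest, at the cost of quieter motion on calm days.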

Noise Fields

Generative

p5.js sketch

Exploring ways of drawing with code (p5.js) to see how subtle changes in just a few variables can lead to dramatically different results and endlessly produce interesting variations. These were all generated by the same simple set of coded instructions with only a couple of numbers altered. Sometimes they are minimal and constrained, resembling my abstract paintings from the ’90s and early 2000s and nodding to Rothko, Motherwell, Richter, etc. Other times they are glitched, chaotic, and dynamic but still poetically structured. It’s so exciting to see how responsive and expressive it can be. I was planning to release this on FXHash with adjustable user parameters, but we’ll see… everything is pretty dismal in web3 these days. Either way, these explorations are helping me develop bigger ideas (that I can’t mention yet) about turning data into art.

Kinetic Mirrors

@ Volvox Labs

Brooklyn NY

TouchIn 2024

Volvox Labs invited me to be a guest artist for TouchIn 2024 in their Brooklyn studio. They let me take over their existing kinetic installation of 5 LED panels and 5 columns of motorized mirrors. I created an adaptation of “All of the Colors All of the Time” and built a brand-new audio analysis patch that can autonomously adapt to any music (a live feed from the DJ, in this case), find the rhythm of a track, and use the beat to push the work forward in sync.

To control the motorized mirrors, I programmed a variety of patterns: beat-driven waves, “sample & hold” patterns (where a random position is chosen and the other mirrors align in sequence), and movements based on 5 audio frequency bands. A counter listens for 16 beats and then moves on to the next pattern. The result is an endlessly evolving generative kinetic work in which the mirrors reflect chromatic refractions, like a life-size kaleidoscope.
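The mirror sequencing can be sketched as a few small pieces: a phase-offset wave driven by the beat count, a “sample & hold” pattern in which one more mirror snaps to a newly sampled random position on each beat, and a counter that switches patterns every 16 beats. Beat detection itself is not shown; beats are assumed to arrive as events from the audio analysis, mirror positions are normalized to 0..1, and the names and the 8-beat wave cycle are hypothetical.

```python
import math
import random

NUM_MIRRORS = 5         # five columns of motorized mirrors (from the text)
BEATS_PER_PATTERN = 16  # the counter moves on after 16 beats (from the text)


def wave(beat: int, beats_per_cycle: int = 8) -> list[float]:
    """Beat-driven wave: each column lags its neighbour by a fixed phase."""
    return [
        0.5 + 0.5 * math.sin(2 * math.pi * (beat / beats_per_cycle + i / NUM_MIRRORS))
        for i in range(NUM_MIRRORS)
    ]


class SampleAndHold:
    """Sample a random position, then align one more mirror to it on each beat."""

    def __init__(self, rng: random.Random):
        self.rng = rng
        self.positions = [0.5] * NUM_MIRRORS
        self.target = 0.5
        self.next_index = NUM_MIRRORS  # forces a fresh sample on the first beat

    def on_beat(self) -> list[float]:
        if self.next_index >= NUM_MIRRORS:
            self.target = self.rng.random()  # "sample": pick a new random position
            self.next_index = 0
        self.positions[self.next_index] = self.target  # "hold": next mirror aligns
        self.next_index += 1
        return list(self.positions)


def pattern_index(beat: int, num_patterns: int) -> int:
    """Active pattern: advance every BEATS_PER_PATTERN beats, then wrap around."""
    return (beat // BEATS_PER_PATTERN) % num_patterns
```

On each detected beat, a show loop would call `pattern_index(beat, 2)` and then either `wave(beat)` or `sh.on_beat()` to get the next set of mirror targets. A frequency-band pattern would slot in as a third option the same way.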

Really amazing experience. I’m so happy to have been invited to come play with this team of legends. The TouchDesigner meetup was absolutely epic: the room was full of so many of my heroes, and everyone was so friendly and excited about what we are all building. We became instant friends, and I look forward to seeing everyone again soon.

Thank you Volvox, Derivative and TouchDesigner community.

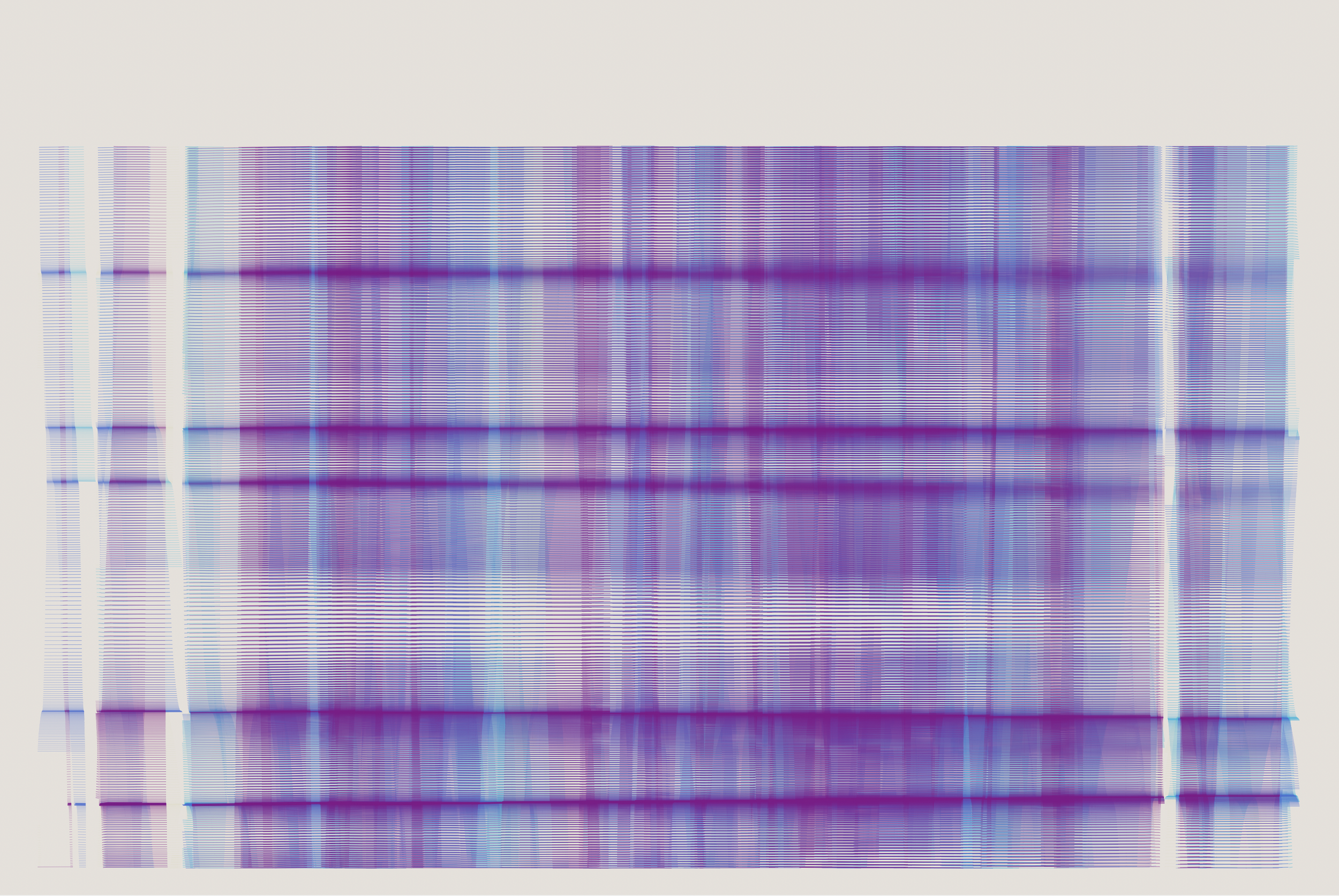

p5.js + Pen Plotter

AxiDraw SE/A1

Building a new p5.js project around a simple structure of parallel lines, offset by overlapping noise displacements. Subtle tweaks to the variables result in a variety of distinct styles/genres. I’m thinking of making this a user-controllable release.
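The parallel-lines structure can be sketched in Python (rather than p5.js) to show how two overlapping noise layers displace evenly spaced lines. p5.js’s `noise()` is Perlin noise; this sketch swaps in simpler 1-D value noise, and every parameter name and default is hypothetical. Changing just `amp`, `freq`, or `detail` is enough to move between calm, banded results and more chaotic ones. The `to_svg` helper writes plain SVG paths, since pen-plotter workflows commonly start from SVG.

```python
import math
import random


class ValueNoise1D:
    """Smooth 1-D value noise in 0..1, a stand-in for p5.js noise() (which is Perlin-based)."""

    def __init__(self, seed=0, size=256):
        rng = random.Random(seed)
        self.values = [rng.random() for _ in range(size)]
        self.size = size

    def __call__(self, x):
        i = math.floor(x)
        t = x - i
        a = self.values[i % self.size]
        b = self.values[(i + 1) % self.size]
        t = t * t * (3 - 2 * t)  # smoothstep easing between lattice points
        return a + (b - a) * t


def parallel_lines(n_lines=40, width=800, spacing=10, step=4,
                   amp=30, freq=0.01, detail=0.05, seed=0):
    """Evenly spaced horizontal lines, each offset by two overlapping noise layers."""
    noise = ValueNoise1D(seed)
    lines = []
    for j in range(n_lines):
        base_y = j * spacing
        pts = []
        for x in range(0, width + 1, step):
            broad = noise(x * freq + j * 0.1)            # slow swell, similar on neighbouring lines
            fine = noise(x * detail + 1000 + j * 0.3)    # finer ripple from a different region
            dy = amp * ((broad - 0.5) + 0.3 * (fine - 0.5))
            pts.append((x, base_y + dy))
        lines.append(pts)
    return lines


def to_svg(lines, width=800, height=400):
    """Serialize polylines as SVG paths for a plotter workflow."""
    paths = []
    for pts in lines:
        d = "M " + " L ".join(f"{x:.2f} {y:.2f}" for x, y in pts)
        paths.append(f'<path d="{d}" fill="none" stroke="black"/>')
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">'
            + "".join(paths) + "</svg>")
```

Because neighbouring lines sample nearby points of the broad layer, they bend together like a single gesture, while the fine layer keeps each pen stroke slightly distinct.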

Fragmented Depictions

Photogrammetry / 3D Modeling

A new series of photogrammetry scans transformed into 3D space. This series explores enigmatic forces of light and dark, and existence within multiple simultaneous states of being.

Fjallsárlón

Recursive Reflections

@ LUME Studios Tribeca NYC

curated by Clay Devlin

Frieze 2023

Fjallsárlón was created from neural radiance field (NeRF) video files captured during drone flights over Iceland’s lesser-known glacier lagoon and the tongue of the enormous Vatnajökull glacier. This piece is a compilation of several individual video loops, taking viewers on a journey through exploded 3D geometry. As 3D photogrammetry and LiDAR rapidly integrate into our daily lives, I enjoy using the quirks and artifacts of this technology to showcase dramatic, deconstructed landscapes. The fractured icebergs are remnants of centuries past. Here, they are drawn into current discussions surrounding technology that is evolving in ways we cannot yet fully understand, perhaps contributing to positive evolution or perhaps signaling irreversible destruction.

Waveforms

S¿bjective Art

@ LUME Studios Tribeca

NFT NYC 2023

curated by Raina Mehler,

Director @Pace Gallery.

Waveforms is an endlessly evolving generative digital work developed to imitate my own style of IRL acrylic paintings. Created in TouchDesigner.

Landscaper

CADAF 2022

Crypto & Digital Art Fair

@ WEB3 Gallery 5th Ave NYC. Curated by Elena Zavelev & Andrea Steuer

Landscaper is a generative art project that constructs infinite topographic outcomes. The works are a collaboration between the artist, the viewer, the computer, and random “forces of nature”. Using physical controls to adjust certain pre-selected variables, viewers @ CADAF 2022 in NYC influenced the outcome of numerous iterations until they discovered their perfect piece of this infinite world. Final works are available on objkt.com.

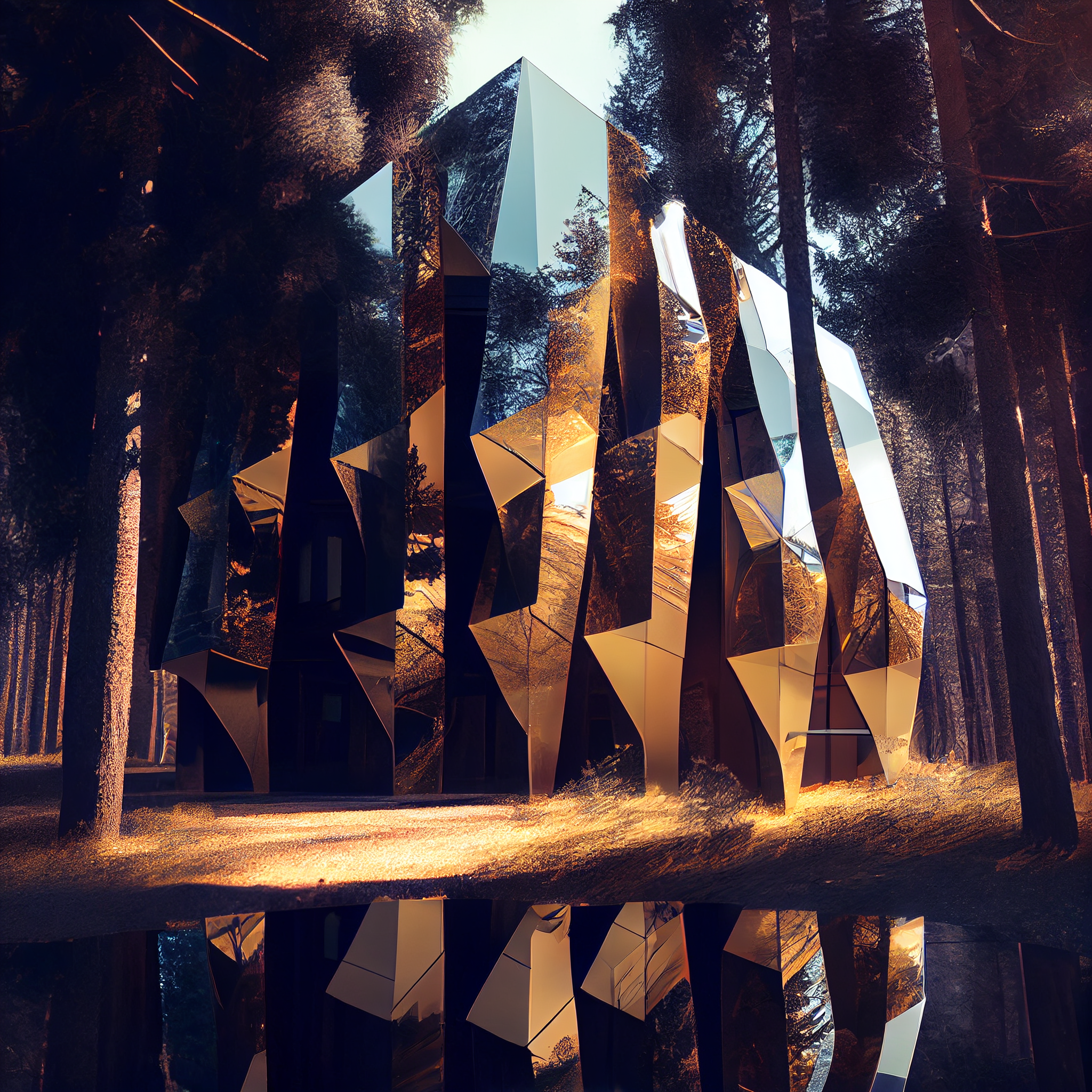

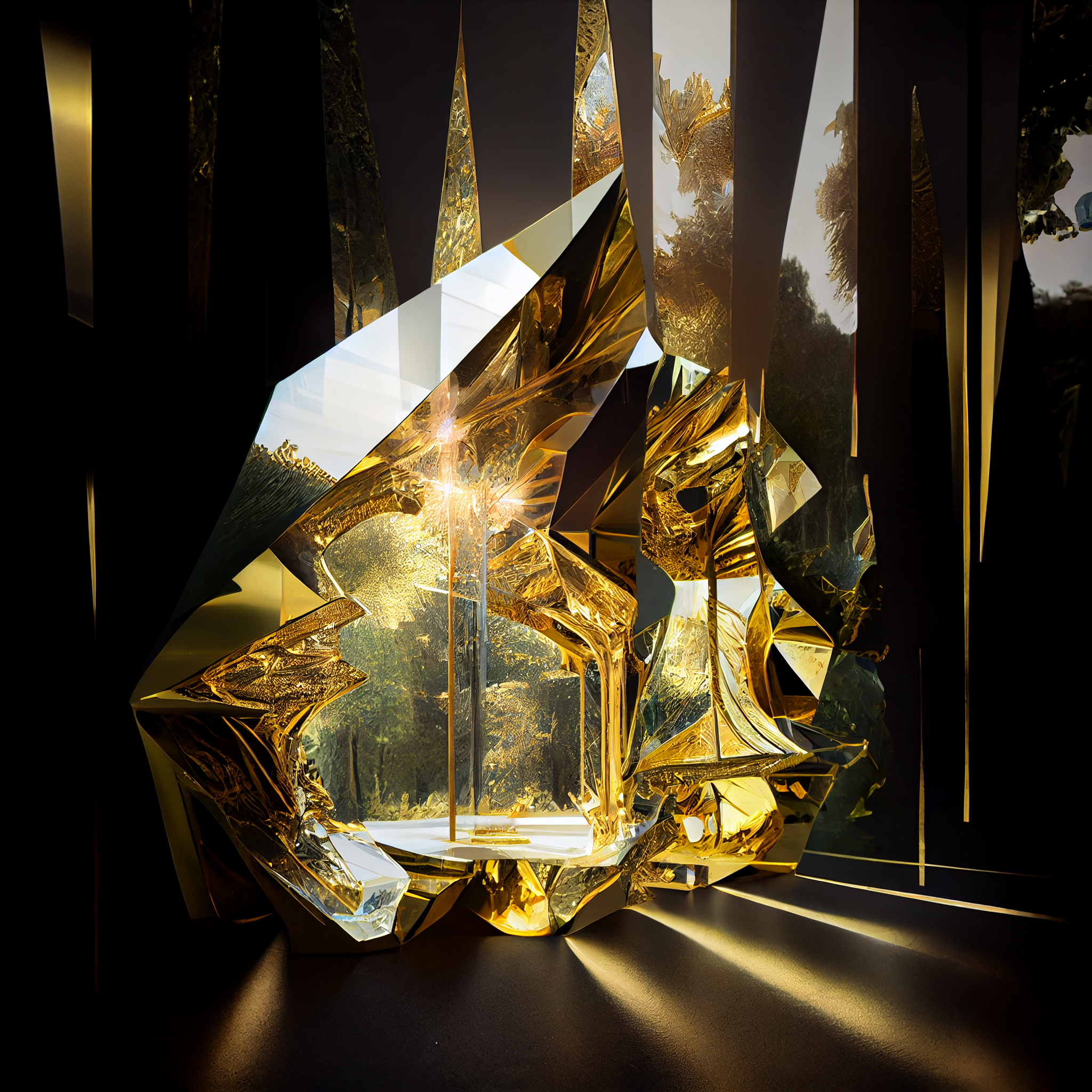

Midjourney

Architecture

& Design

Experiments

My opinions on AI change every day. I’m learning to code with ChatGPT, which feels like an absolute superpower. I worry about the negative impact of AI models trained on other people’s work and the likelihood of AI irreversibly changing things we can’t fully understand. But maybe it also cures cancer? How long will it be until we can talk to birds? For now, I’m cautiously optimistic about what it can do to help my workflow, widen my creative vision, teach me new skills, and pump out pretty jpegs of sci-fi/hypermodern gold architecture.